- Hands-on review finds Chrome’s Auto Browse AI agent falls short in real-world tasks

- The tool reportedly struggles with basic web actions and multi-step flows

- Implications for users and developers: test automations and keep manual fallbacks

- Google will likely need iterative fixes before broad adoption

What happened

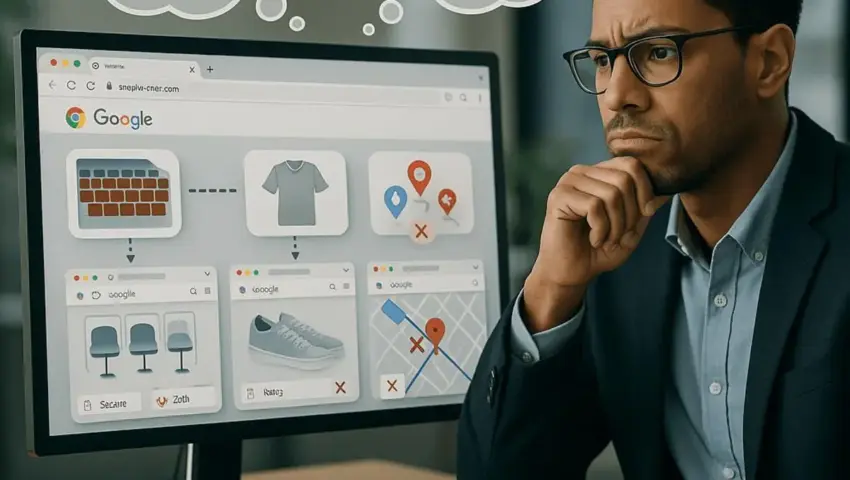

A recent hands-on review of Google’s Auto Browse AI agent — a new automation feature in Chrome — concluded that the tool struggles to complete many common web tasks in real-world conditions. Reviewers found the agent unreliable when faced with routine page changes, unpredictable site behavior, and workflows that require multiple steps.

Why the problem matters

Browser-based automation promises big productivity gains: filling forms, navigating sites, and stitching simple workflows together without manual effort. When an automation agent fails unpredictably, it can cost more than doing the task by hand: wasted time, broken processes, and potential data errors. That makes reliability essential before people and teams commit to an AI agent for day-to-day browsing tasks.

Key limitations noted in the review

According to the review, hands-on testing surfaced three recurring weaknesses: the agent had trouble adapting to minor page layout changes, completing longer multi-step tasks reliably, and recovering from unexpected errors. As a result, Auto Browse can be brittle outside carefully controlled demos.

Reactions and likely impact

Early adopters and developers who were hoping to offload repetitive browser tasks should treat the tool with caution. The review’s findings will likely slow broad adoption until Google addresses stability and robustness. At the same time, the existence of Auto Browse signals Google’s investment in embedding AI directly into browser workflows — and that alone will keep interest high among developers and automation teams watching for improvements.

Practical advice for users and teams

- Test any Auto Browse flows on the exact pages and scenarios you need before replacing manual steps.

- Keep manual fallbacks or script-based automation (Selenium, Playwright, RPA tools) for critical processes.

- Expect iterative updates: monitor Chrome release notes and community forums for fixes and best-practice patterns.

- Limit early use to low-risk, non-critical tasks until stability improves.
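
The fallback advice above can be sketched as a small wrapper that tries an agent-driven task a limited number of times, checks the result, and hands off to a scripted or manual path if the agent fails. This is a minimal illustration, not an Auto Browse integration: the review describes no programmatic interface, so `flaky_agent_task`, `scripted_task`, `run_with_fallback`, and `RunResult` are all hypothetical names, and the agent is simulated by a stub that always fails.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class RunResult:
    """Outcome of one task run (hypothetical helper type)."""
    value: Any
    used_fallback: bool
    attempts: int
    last_error: Optional[Exception] = None


def run_with_fallback(
    primary: Callable[[], Any],
    fallback: Callable[[], Any],
    validate: Callable[[Any], bool],
    max_attempts: int = 2,
) -> RunResult:
    """Try `primary` up to `max_attempts` times, then use `fallback`.

    A primary result only counts as success if `validate` accepts it,
    so a run that "finishes" with wrong data is treated as a failure.
    """
    last_error: Optional[Exception] = None
    for attempt in range(1, max_attempts + 1):
        try:
            value = primary()
            if validate(value):
                return RunResult(value, used_fallback=False, attempts=attempt)
            last_error = ValueError("primary result failed validation")
        except Exception as exc:  # any agent failure triggers retry or fallback
            last_error = exc
    # Every primary attempt failed: run the established path instead.
    return RunResult(
        fallback(),
        used_fallback=True,
        attempts=max_attempts,
        last_error=last_error,
    )


# --- Stubs standing in for real tasks (assumptions, not real APIs) ---

def flaky_agent_task() -> dict:
    """Simulates an agent run that breaks after a page layout change."""
    raise TimeoutError("agent could not find the submit button")


def scripted_task() -> dict:
    """Simulates an existing script (e.g. a Selenium or Playwright job)."""
    return {"status": "submitted"}


def is_submitted(result: Any) -> bool:
    """Accept only results that report a completed submission."""
    return isinstance(result, dict) and result.get("status") == "submitted"


if __name__ == "__main__":
    outcome = run_with_fallback(flaky_agent_task, scripted_task, is_submitted)
    print(outcome)
```

Validating the result, rather than only catching exceptions, is a deliberate choice here: an agent that completes a flow with wrong data is harder to notice than one that crashes. Recording `last_error` also gives teams a log of why the agent path failed, which is useful when deciding whether a later release is stable enough to retry.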

What’s next

Google is likely to iterate on the Auto Browse agent after early feedback. Improvements typically come through patch releases, expanded test coverage, and better handling of edge cases. For now, the hands-on review serves as a reminder: promising AI features can still be fragile in the messy, unpredictable conditions of the real web.

If you rely on browser automation, treat Auto Browse as an experimental tool: test it thoroughly, assume occasional failures, and plan fallbacks. Its value should grow as Google refines the agent, but today the review suggests it is not yet ready to replace established automation methods.

Image Reference: https://www.techbuzz.ai/articles/google-s-auto-browse-ai-agent-falls-short-in-real-world-tests