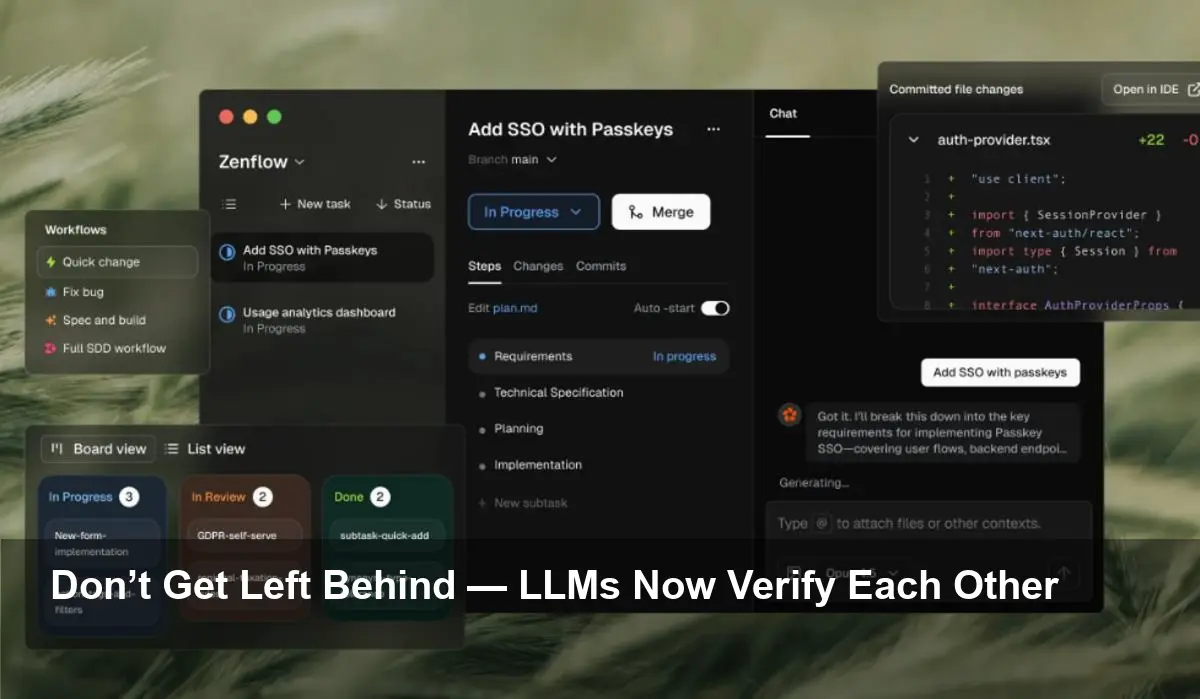

- Zencoder’s new product, Zenflow, orchestrates multiple large language models (LLMs) to verify each other’s code and outputs.

- The platform applies automated peer-review and verification loops to reduce errors and accelerate AI-driven code automation.

- Zenflow is designed to integrate into developer workflows and CI/CD pipelines, giving teams optional human oversight while scaling automation.

Zenflow uses LLMs to check LLMs: a new layer of automation safety

Zencoder’s Zenflow introduces a verification-first approach to AI code automation by having multiple LLMs review and validate each other’s work. The company says the system creates automated peer-review loops that flag inconsistencies, reduce obvious mistakes and raise confidence in AI-produced code before it moves into production.

How Zenflow’s multi-LLM verification works

Zenflow orchestrates several AI agents to take independent passes at a coding task and then compares their outputs. When discrepancies appear, additional verification steps run to determine the most reliable result or to escalate the issue for human review. The platform is designed to be inserted into existing development workflows and CI/CD pipelines so teams can adopt automated verification without replacing their current toolchain.
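The compare-then-escalate flow described above can be sketched in a few lines. This is only an illustration, not Zenflow's implementation or API: the `Agent` type, `cross_check`, `normalize`, `Verdict` and the `min_agreement` threshold are all hypothetical names, and simple majority voting on normalized text is an assumed stand-in for whatever comparison the product actually performs.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Callable, Optional, Sequence

# Hypothetical stand-in for an LLM-backed agent: takes a task, returns code.
Agent = Callable[[str], str]


@dataclass
class Verdict:
    accepted: Optional[str]  # the output most agents agreed on, if any
    agreement: float         # share of agents that produced that output
    escalate: bool           # True when a human should review the task


def normalize(output: str) -> str:
    """Drop trailing whitespace and blank lines so formatting-only
    differences between agents do not count as disagreement."""
    lines = [line.rstrip() for line in output.strip().splitlines()]
    return "\n".join(line for line in lines if line)


def cross_check(task: str, agents: Sequence[Agent],
                min_agreement: float = 0.6) -> Verdict:
    """Run every agent independently and accept the majority output
    only if enough agents agree; otherwise flag for human review."""
    if not agents:
        raise ValueError("at least one agent is required")
    outputs = [normalize(agent(task)) for agent in agents]
    best, votes = Counter(outputs).most_common(1)[0]
    agreement = votes / len(outputs)
    if agreement >= min_agreement:
        return Verdict(accepted=best, agreement=agreement, escalate=False)
    # Assumed policy: too little agreement means no output is trusted.
    return Verdict(accepted=None, agreement=agreement, escalate=True)
```

Exact text matching is the crudest possible comparison. A real system would more plausibly compare behavior, for example by running tests against each candidate, but the control flow of independent passes, comparison and escalation stays the same.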

Key capabilities

- Multi-agent review: Multiple LLMs independently generate and review code snippets or automation steps.

- Automated verification loops: Zenflow triggers re-checks and cross-validation when agents disagree.

- Human-in-the-loop options: Teams can configure thresholds that require a developer to sign off on uncertain changes.

- Integration-ready: Built to slot into standard dev pipelines and automation platforms, reducing friction for adoption.
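To make the re-check and sign-off capabilities concrete, here is a minimal sketch of how a team might encode such thresholds. The article does not describe Zenflow's configuration format, so `ReviewPolicy`, `Action`, `decide` and every default value below are assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    AUTO_APPROVE = "auto_approve"
    RECHECK = "recheck"
    HUMAN_REVIEW = "human_review"


@dataclass(frozen=True)
class ReviewPolicy:
    """Hypothetical thresholds a team could tune to its risk tolerance."""
    approve_at: float = 0.9  # agreement needed to approve without a human
    recheck_at: float = 0.5  # below this, skip re-checks and ask a human
    max_rechecks: int = 2    # cap on automated verification rounds


def decide(policy: ReviewPolicy, agreement: float, rechecks_done: int) -> Action:
    """Map an agreement score in [0, 1] to the next step in the loop."""
    if not 0.0 <= agreement <= 1.0:
        raise ValueError("agreement must be between 0 and 1")
    if agreement >= policy.approve_at:
        return Action.AUTO_APPROVE
    if agreement >= policy.recheck_at and rechecks_done < policy.max_rechecks:
        return Action.RECHECK
    # Low agreement, or the re-check budget is spent: a developer signs off.
    return Action.HUMAN_REVIEW
```

Under this sketch, a cautious team could set `approve_at=1.0` so that anything short of unanimous agreement triggers at least one re-check, while a team automating low-risk changes could lower it.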

Why this matters for teams and businesses

As enterprises push more code generation and automation to AI, concerns around correctness, security and maintainability grow. Zenflow targets those concerns by embedding a verification layer intended to increase confidence in AI outputs. If it works as described, this can shorten review cycles, reduce manual debugging, and make it safer to scale AI-driven development across teams.

Potential impacts

- Faster delivery: By catching obvious errors earlier, teams can move from prototype to production more quickly.

- Better quality: Cross-checking outputs reduces the risk of introducing bugs and regressions from AI-generated code.

- Scalable oversight: Organizations can dial human review up or down depending on risk tolerance.

Market context and what’s next

Zenflow’s multi-LLM verification arrives amid a surge of tools focused on making AI-generated code more reliable. While Zencoder positions the product as a safety and productivity layer, its real-world impact will depend on integration ease, latency, model costs and measurable accuracy improvements in production settings. Early adopters and observers will be watching closely to see whether peer verification becomes a standard practice for AI-assisted development.

Image Reference: https://siliconangle.com/2025/12/16/zencoders-zenflow-gets-llms-verify-others-work-accelerate-ai-code-automation/